When the data volume reaches millions or tens of millions of records, how can a leaderboard be implemented in Java?

Introduction

Over the years, many development teams have stumbled when implementing leaderboard functionality. Today, I'd like to share several strategies for implementing a leaderboard in Java, ranging from simple to complex and from single-machine solutions to distributed architectures, in the hope of helping you make better decisions in real-world projects.

Some Java developers might think, "Isn't it just a leaderboard? Just sort the data in the database!" In reality, it's far more complicated than that. When the data volume reaches millions or even tens of millions of rows, a simple database query can easily become the system's bottleneck.

In the following sections, I’ll walk you through several approaches in detail. Hopefully, this will be useful to you.

Direct Database Sorting

Use Case: Small data volume (fewer than 100,000 records), low real-time requirements

This is the simplest and most straightforward solution — it's usually the first method that comes to every developer’s mind.

Here’s a sample code snippet:

```java
public List<UserScore> getRankingList() {
    String sql = "SELECT user_id, total_score FROM user_scores ORDER BY total_score DESC LIMIT 100";
    return jdbcTemplate.query(sql, new UserScoreRowMapper());
}
```

Advantages:

- Simple to implement
- Low code maintenance cost
- Suitable for scenarios with small data volume

Disadvantages:

- Performance degrades sharply as the data volume grows
- Without an index on total_score, each query has to scan and sort the whole table
- High database load under heavy concurrency
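The LIMIT 100 does not make the query cheap on its own. Without an index on total_score, the database still has to read every row; it only avoids a full sort by keeping a bounded heap of the best rows seen so far (PostgreSQL, for example, reports this as a "top-N heapsort" in EXPLAIN ANALYZE output). The stdlib-only sketch below mimics that strategy in memory to show where the cost comes from; TopNScan and ScoreRow are hypothetical names, not part of any library.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

// In-memory illustration of "ORDER BY total_score DESC LIMIT n" without an index:
// every row is read once, and a min-heap of size n keeps the best rows seen so far.
final class TopNScan {

    static final class ScoreRow {
        final String userId;
        final long totalScore;

        ScoreRow(String userId, long totalScore) {
            this.userId = userId;
            this.totalScore = totalScore;
        }
    }

    static List<ScoreRow> topN(Iterable<ScoreRow> allRows, int n) {
        // Min-heap by score: the root is the weakest row currently kept
        PriorityQueue<ScoreRow> heap =
                new PriorityQueue<>(Comparator.comparingLong((ScoreRow r) -> r.totalScore));
        for (ScoreRow row : allRows) {            // the full scan: N iterations
            if (heap.size() < n) {
                heap.offer(row);                  // O(log n)
            } else if (n > 0 && row.totalScore > heap.peek().totalScore) {
                heap.poll();                      // evict the weakest kept row
                heap.offer(row);
            }
        }
        List<ScoreRow> result = new ArrayList<>(heap);
        // Highest score first, as with ORDER BY ... DESC
        result.sort(Comparator.comparingLong((ScoreRow r) -> r.totalScore).reversed());
        return result;
    }
}
```

The loop visits all N rows, so each request costs O(N log n) even though only n rows come back. An index on total_score lets the database read the first 100 index entries directly, which is worth trying before moving to the heavier designs that follow.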

Cache Plus Scheduled Task

Use Case: Medium data volume (hundreds of thousands of records), acceptable delay of a few minutes

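This approach builds on the first solution by adding a cache: a scheduled task periodically runs the top-100 query and stores the result in Redis, and reads are served from that cached copy.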

Example code:

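```java
@Scheduled(fixedRate = 10000) // Executes every 10 seconds
public void updateRankingCache() {
    List<UserScore> rankings = userScoreDao.getTop100Scores();
    // UserScore must be serializable by the RedisTemplate's configured value serializer
    redisTemplate.opsForValue().set("ranking_top_100_list", rankings);
}

@SuppressWarnings("unchecked")
public List<UserScore> getRankingList() {
    List<UserScore> cached = (List<UserScore>) redisTemplate.opsForValue().get("ranking_top_100_list");
    // The key is empty before the first scheduled run or after a Redis restart,
    // so fall back to the database instead of returning null
    return cached != null ? cached : userScoreDao.getTop100Scores();
}
```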

Advantages:

- Reduces database load
- Fast query speed (O(1))
- Relatively simple to implement

Disadvantages:

- Data lags by up to one refresh interval (depends on task frequency)
- Extra Redis memory is needed for the cached copy
- Rankings are not updated in real time
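The same refresh-and-swap idea can be sketched without Spring or Redis, which makes the staleness trade-off easy to see in a test. In this hypothetical ScheduledRankingCache, a ScheduledExecutorService stands in for @Scheduled and an AtomicReference stands in for the Redis key. It is an illustration under those assumptions, not a drop-in replacement: it caches per JVM, whereas the Redis copy is shared by all instances.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;
import java.util.function.Supplier;

// Stdlib-only sketch of "scheduled task + cache": a background job rebuilds the
// ranking list, and readers always see the last completed snapshot.
final class ScheduledRankingCache<T> {
    private final Supplier<List<T>> loader; // hypothetical: e.g. the top-100 database query
    private final AtomicReference<List<T>> snapshot = new AtomicReference<>();

    ScheduledRankingCache(Supplier<List<T>> loader) {
        this.loader = loader;
    }

    /** Builds the new list off to the side, then publishes it in one atomic swap. */
    void refresh() {
        List<T> fresh = Collections.unmodifiableList(new ArrayList<>(loader.get()));
        snapshot.set(fresh);
    }

    /** Starts periodic refreshes; the caller owns the returned executor and must shut it down. */
    ScheduledExecutorService start(long periodSeconds) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleAtFixedRate(() -> {
            try {
                refresh();
            } catch (RuntimeException e) {
                // An uncaught exception would suppress all future runs; keep serving
                // the previous snapshot instead (log the error in real code)
            }
        }, 0, periodSeconds, TimeUnit.SECONDS);
        return scheduler;
    }

    List<T> get() {
        List<T> current = snapshot.get();
        // Before the first refresh has completed, fall back to a direct load
        return current != null ? current : loader.get();
    }
}
```

Calling refresh() directly in a test makes the delay visible: after the source data changes, get() keeps returning the old snapshot until the next refresh, which is exactly the lag listed under Disadvantages.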

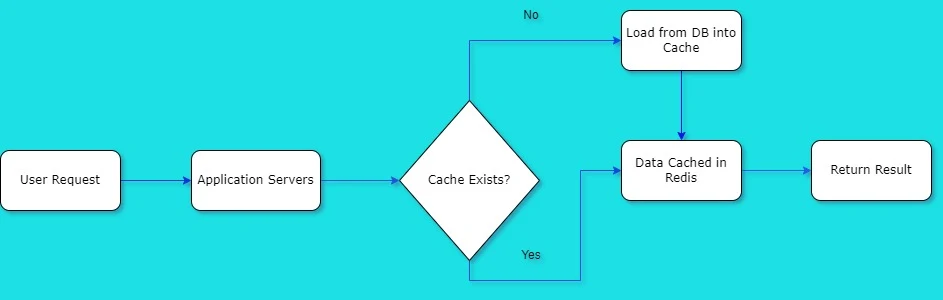

The architecture diagram is as follows:

Redis Sorted Set

Use Case: Large data volume (millions), requiring real-time updates

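Redis’s Sorted Set (ZSET) is a natural fit for leaderboards: it keeps members ordered by score, so updates, top-N queries, and rank lookups are all handled by Redis itself.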

Example code:

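```java
// Assumes redisTemplate is a RedisTemplate<String, String> (e.g. StringRedisTemplate)
public void addUserScore(String userId, double score) {
    // ZADD sets (overwrites) the score; use incrementScore(...) to add points instead
    redisTemplate.opsForZSet().add("top_ranking", userId, score);
}

public List<String> getTopUsers(int topN) {
    // ZREVRANGE returns an ordered Set (highest score first), so copy it into a List
    Set<String> top = redisTemplate.opsForZSet().reverseRange("top_ranking", 0, topN - 1);
    return top == null ? Collections.emptyList() : new ArrayList<>(top);
}

public Long getUserRank(String userId) {
    // ZREVRANK is 0-based and returns null for users not on the leaderboard
    Long rank = redisTemplate.opsForZSet().reverseRank("top_ranking", userId);
    return rank == null ? null : rank + 1;
}
```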

Advantages:

- High performance: ZADD and ZREVRANK are O(log(N)), and fetching the top M entries with ZREVRANGE is O(log(N) + M)
- Supports real-time updates
- Naturally supports pagination
- Can retrieve any user's rank

Disadvantages:

- Capacity is limited by the memory of a single Redis instance
- Redis persistence (RDB or AOF) must be configured, or rankings may be lost on restart
- Additional handling required in distributed environments
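Pagination maps directly onto ZREVRANGE, whose start and stop indices are 0-based and inclusive. The arithmetic is small but easy to get off by one; LeaderboardPaging below is a hypothetical helper, sketched under the assumption that pages are numbered from 1.

```java
// Hypothetical helper: maps 1-based pages onto the 0-based, inclusive
// start/stop indices used by ZREVRANGE (reverseRange in Spring Data Redis).
final class LeaderboardPaging {

    /** Returns {start, stop} for the given 1-based page. */
    static long[] pageRange(int page, int pageSize) {
        if (page < 1 || pageSize < 1) {
            throw new IllegalArgumentException("page and pageSize must be >= 1");
        }
        long start = (long) (page - 1) * pageSize;
        long stop = start + pageSize - 1; // inclusive, like ZREVRANGE's stop index
        return new long[] {start, stop};
    }

    /** 1-based page on which a user with the given 0-based ZREVRANK appears. */
    static long pageOfRank(long zeroBasedRank, int pageSize) {
        return zeroBasedRank / pageSize + 1;
    }
}
```

With the earlier example, page 3 with 20 entries per page would be fetched as reverseRange("top_ranking", 40, 59), at a cost of O(log(N) + 20); a user whose reverseRank is 45 appears on that same page.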

Sharding + Redis Cluster

Use Case: Ultra-large scale data (tens of millions or more), high concurrency scenarios

When a single Redis instance cannot meet the requirements, a sharding solution can be adopted.

Example code:

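```java
public void addUserScore(String userId, double score) {
    RScoredSortedSet<String> set = redisson.getScoredSortedSet("ranking:" + getShard(userId));
    set.add(score, userId);
}

private String getShard(String userId) {
    // Simple hash-based sharding across 16 sorted sets.
    // Math.floorMod is never negative, whereas Math.abs(hashCode()) stays
    // negative when hashCode() is Integer.MIN_VALUE.
    int shard = Math.floorMod(userId.hashCode(), 16);
    return "shard_" + shard;
}
```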

This example uses the Redisson client.

Advantages:

- Strong horizontal scalability
- Supports ultra-large scale data
- Stable performance under high concurrency

Disadvantages:

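- High architectural complexity
- Cross-shard queries are difficult: the global top N or a user's global rank requires querying every shard and merging the results
- Need to maintain the sharding strategy (changing the shard count moves most users to different shards)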
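To make the cross-shard difficulty concrete: once users are hashed across 16 sorted sets, neither the overall top N nor a user's overall rank lives in any single shard. A common workaround is scatter-gather, sketched below in stdlib-only Java. It assumes each shard's own top N (sorted highest first) and each shard's count of higher scores have already been fetched; ShardMerge and Entry are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.PriorityQueue;

// Scatter-gather over hash shards: merge each shard's top list into a global top N,
// and sum per-shard "higher score" counts into a global rank.
final class ShardMerge {

    static final class Entry {
        final String userId;
        final double score;

        Entry(String userId, double score) {
            this.userId = userId;
            this.score = score;
        }
    }

    /** K-way merge of per-shard lists (each sorted by score descending) into the global top n. */
    static List<Entry> globalTopN(List<List<Entry>> perShardTop, int n) {
        // Heap of cursors {shardIndex, position}; the highest current score is on top
        PriorityQueue<int[]> heap = new PriorityQueue<>(
                Comparator.comparingDouble((int[] c) -> perShardTop.get(c[0]).get(c[1]).score).reversed());
        for (int s = 0; s < perShardTop.size(); s++) {
            if (!perShardTop.get(s).isEmpty()) heap.offer(new int[] {s, 0});
        }
        List<Entry> result = new ArrayList<>();
        while (result.size() < n && !heap.isEmpty()) {
            int[] c = heap.poll();
            List<Entry> shard = perShardTop.get(c[0]);
            result.add(shard.get(c[1]));
            // Advance this shard's cursor if it has more entries
            if (c[1] + 1 < shard.size()) heap.offer(new int[] {c[0], c[1] + 1});
        }
        return result;
    }

    /** Global 1-based rank: 1 + number of users, across all shards, with a strictly higher score. */
    static long globalRank(List<Long> higherCountPerShard) {
        long higher = 0;
        for (long count : higherCountPerShard) higher += count;
        return higher + 1;
    }
}
```

Each shard only has to return its own top N, because any user in the global top N is necessarily in their own shard's top N. The inputs could come from ZREVRANGE key 0 N-1 WITHSCORES and ZCOUNT key (score +inf (exclusive lower bound) on each shard, issued in parallel. Under this counting, tied users share a rank, unlike ZREVRANK within a single sorted set.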

The architecture diagram is as follows:

Precomputation + Layered Caching

Use Case: The leaderboard changes infrequently but receives very high read traffic

This approach combines pre-computation with multi-level caching.

Example code:

```java
@Scheduled(cron = "0 0 * * * ?") // Runs at the start of every hour
public void precomputeRanking() {
    Map<String, Integer> rankings = calculateRankings();
    redisTemplate.opsForHash().putAll("ranking:hourly", rankings);
    // Only this instance's local cache is refreshed here. If the job runs on a single
    // instance (e.g. behind a distributed lock), give localCache an expiry so other
    // instances do not serve stale ranks indefinitely.
    localCache.putAll(rankings);
}

public Integer getUserRank(String userId) {
    // 1. Check local cache first
    Integer rank = localCache.get(userId);
    if (rank != null) return rank;

    // 2. Then check Redis
    rank = (Integer) redisTemplate.opsForHash().get("ranking:hourly", userId);
    if (rank != null) {
        localCache.put(userId, rank); // Backfill local cache
        return rank;
    }

    // 3. Finally check the database
    return userScoreDao.getUserRank(userId);
}
```
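The example calls calculateRankings() without showing it. A hypothetical version might look like the sketch below. Unlike the original, it takes the scores as a parameter, and it assumes competition ranking, where tied users share a rank (1, 2, 2, 4); dense ranking (1, 2, 2, 3) would be an equally valid choice.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical calculateRankings(): turns userId -> score into userId -> rank
// using competition ranking (equal scores share a rank, e.g. 1, 2, 2, 4).
final class RankCalculator {

    static Map<String, Integer> calculateRankings(Map<String, Long> scores) {
        List<Map.Entry<String, Long>> sorted = new ArrayList<>(scores.entrySet());
        // Highest score first
        sorted.sort(Map.Entry.<String, Long>comparingByValue().reversed());

        Map<String, Integer> ranks = new HashMap<>();
        int rank = 0;
        Long previous = null;
        for (int i = 0; i < sorted.size(); i++) {
            Map.Entry<String, Long> e = sorted.get(i);
            // A lower score starts a new rank at its 1-based position;
            // an equal score keeps the previous rank
            if (!e.getValue().equals(previous)) {
                rank = i + 1;
                previous = e.getValue();
            }
            ranks.put(e.getKey(), rank);
        }
        return ranks;
    }
}
```

Sorting every user costs O(N log N) per run, which is the precomputation cost noted under Disadvantages; an hourly schedule spreads that cost across all the reads served in between.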

Advantages:

- Extremely high access performance (local cache O(1))
- Reduces pressure on Redis
- Suitable for read-heavy, write-light scenarios

Disadvantages:

- Poor real-time accuracy: rankings can be up to one recomputation interval (an hour here) out of date
- High resource consumption for precomputation
- High implementation complexity

The architecture diagram is as follows:

Real-Time Computation + Stream Processing

Use Case: Social platforms requiring real-time updates with massive data volume

This solution uses stream processing technologies to implement a real-time leaderboard. Below is an example using Apache Flink:

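```java
import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.functions.KeyedProcessFunction;
import org.apache.flink.util.Collector;

DataStream<UserAction> actions = env.addSource(new UserActionSource());

// Keep a running total per user. The stream is keyed by user ID,
// so a ValueState already holds exactly one total per user.
DataStream<Tuple2<String, Double>> scores = actions
        .keyBy(UserAction::getUserId)
        .process(new KeyedProcessFunction<String, UserAction, Tuple2<String, Double>>() {
            private transient ValueState<Double> totalScore;

            @Override
            public void open(Configuration parameters) { // Flink 1.x signature
                totalScore = getRuntimeContext().getState(
                        new ValueStateDescriptor<>("totalScore", Double.class));
            }

            @Override
            public void processElement(UserAction action, Context ctx,
                                       Collector<Tuple2<String, Double>> out) throws Exception {
                Double current = totalScore.value(); // null on a user's first action
                double newScore = (current == null ? 0.0 : current) + calculateScore(action);
                totalScore.update(newScore);
                out.collect(Tuple2.of(action.getUserId(), newScore));
            }
        });

// Ranking needs every user's score in one place, so all updates are routed to one
// constant key; ranking therefore runs on a single parallel instance.
scores.keyBy(t -> "global")
        .process(new RankProcessFunction())
        .addSink(new RankingSink());
```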
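RankProcessFunction is not shown in the example. Its core job is to keep every user's latest total and answer "who is in the top N" after each update. The stdlib-only sketch below (LiveRanking is a hypothetical name) does this with a HashMap plus a TreeSet ordered by score. In a real Flink job, this state would have to live in Flink managed state, or be rebuilt on restore, to survive failures; plain Java fields are not checkpointed.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

// Hypothetical core of a RankProcessFunction: each user's latest score plus an
// ordered index over all users, so updates and top-N reads stay cheap.
final class LiveRanking {

    static final class Entry {
        final String userId;
        final double score;

        Entry(String userId, double score) {
            this.userId = userId;
            this.score = score;
        }
    }

    // Highest score first; ties broken by userId so distinct users never compare equal
    private final TreeSet<Entry> ordered = new TreeSet<>(
            Comparator.comparingDouble((Entry e) -> e.score).reversed()
                    .thenComparing(e -> e.userId));
    private final Map<String, Entry> latest = new HashMap<>();

    /** Applies a (userId, newTotal) update from the upstream operator in O(log N). */
    void update(String userId, double newTotal) {
        Entry old = latest.get(userId);
        if (old != null) ordered.remove(old); // drop the user's previous position
        Entry fresh = new Entry(userId, newTotal);
        ordered.add(fresh);
        latest.put(userId, fresh);
    }

    /** Current top n, highest score first. */
    List<Entry> topN(int n) {
        List<Entry> result = new ArrayList<>();
        Iterator<Entry> it = ordered.iterator();
        while (result.size() < n && it.hasNext()) result.add(it.next());
        return result;
    }
}
```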

Advantages:

- True real-time updates
- Handles extremely high concurrency
- Supports complex computation logic

Disadvantages:

- High architectural complexity
- High operations and maintenance cost
- Requires a specialized engineering team

The architecture diagram is as follows:

| Solution | Data Volume | Real-Time | Complexity | Suitable Scenarios |
|---|---|---|---|---|
| Database Sorting | Small | Low | Low | Personal projects, small-scale applications |
| Cache + Scheduled Tasks | Medium | Medium | Medium | Small to medium applications where delay is acceptable |
| Redis Sorted Set | Large | High | Medium | Large applications requiring real-time updates |
| Sharding + Redis Cluster | Extra Large | High | High | Ultra-large applications with extremely high traffic |
| Precomputation + Layered Cache | Large | Low | High | Read-heavy, write-light scenarios with massive traffic |
| Real-Time Computation + Stream Processing | Extra Large | Very High | Very High | Social platforms requiring real-time ranking |

When choosing a leaderboard implementation strategy, we need to consider the following key factors:

Data Volume: The size of the dataset directly influences the choice of solution.

Real-Time Requirements: Must rankings update within seconds, or is a delay of minutes or even hours acceptable?

Concurrency: How much traffic is the system expected to handle?

Development Resources: Does the team have sufficient technical capability to maintain a complex solution?

Business Requirements: Is the leaderboard computation logic simple or complex?

For most small to medium-sized applications, Cache + Scheduled Tasks or Redis Sorted Set is usually sufficient.

If the business grows rapidly, the system can gradually evolve to Sharding + Redis Cluster.

For read-heavy scenarios with massive traffic where some delay is acceptable, consider Precomputation + Layered Cache; for real-time updates at massive scale, such as on social media platforms, consider Real-Time Computation + Stream Processing. Both require solid technical preparation and robust architectural design.

Finally, regardless of which solution is chosen, it’s crucial to implement proper monitoring and performance testing.

Because a leaderboard is accessed so frequently, its performance directly impacts user experience.

It is strongly recommended to conduct stress testing in real-world environments and adjust the solution based on the test results.
